Much of the data on which policy and program decisions are based come from stakeholder surveys. A new brief from Mathematica Policy Research shows how behavioral science can raise the share of sample members who call in to complete these surveys, which reduces data collection costs.

Convincing people to respond to surveys has grown increasingly difficult with the rise of do-not-call lists and the abandonment of landlines. Intensified efforts to locate and engage potential respondents can help, but these approaches are expensive. On the National Beneficiary Survey, which Mathematica conducted for the Social Security Administration, the study team used behavioral science to address these issues. The team tested different versions of a letter mailed at the start of data collection. The goal was to find out whether one version was more effective at prompting sample members to call Mathematica and complete the interview before the team launched more intensive efforts to gain cooperation.

All sample members received an advance letter seven days before telephone interviewing began. In addition to the original version of this standard letter, the team created two other versions that used slightly different approaches to encourage participation. One version used more personally relevant language, and the other gave more concrete directions about what the recipient should do next. All three versions described the background and purpose of the study, addressed privacy and confidentiality concerns, and offered a gift card for completing the survey.

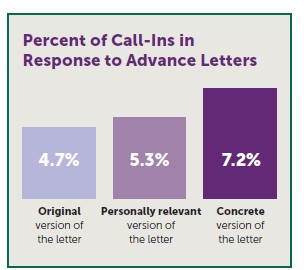

The concrete version of the letter used principles from behavioral science to condense several possible steps in the original letter into one clear action to take within a stated time frame. It produced the highest call-in rate, 7.2 percent. That is more than 50 percent higher than the 4.7 percent rate in the control condition.
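As a quick check on the "more than 50 percent" figure, the relative increase can be computed from the two reported call-in rates alone:

$$
\frac{7.2 - 4.7}{4.7} = \frac{2.5}{4.7} \approx 0.53
$$

In other words, the concrete letter raised the call-in rate by 2.5 percentage points, which is roughly a 53 percent relative increase over the control rate.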

“This experiment shows that by making small changes to language and framing options for sample members to take action in a specific and tailored way, researchers can influence the behavior of their survey samples and increase the likelihood of response,” notes Mathematica Senior Fellow Amy Johnson. “In turn, this can help to contain costs on national surveys that have large samples and need high response rates.”

Read the brief: “Using Behavioral Science to Improve Survey Response: An Experiment with the National Beneficiary Survey.”